QA engineers at OrbitSoft test each product in several stages: smoke testing → functional testing → regression testing → acceptance testing. Careful testing ensures that the customer receives the functionality they expect. In this article, we describe our work process in detail.

Stage 1: Requirements analysis and documentation

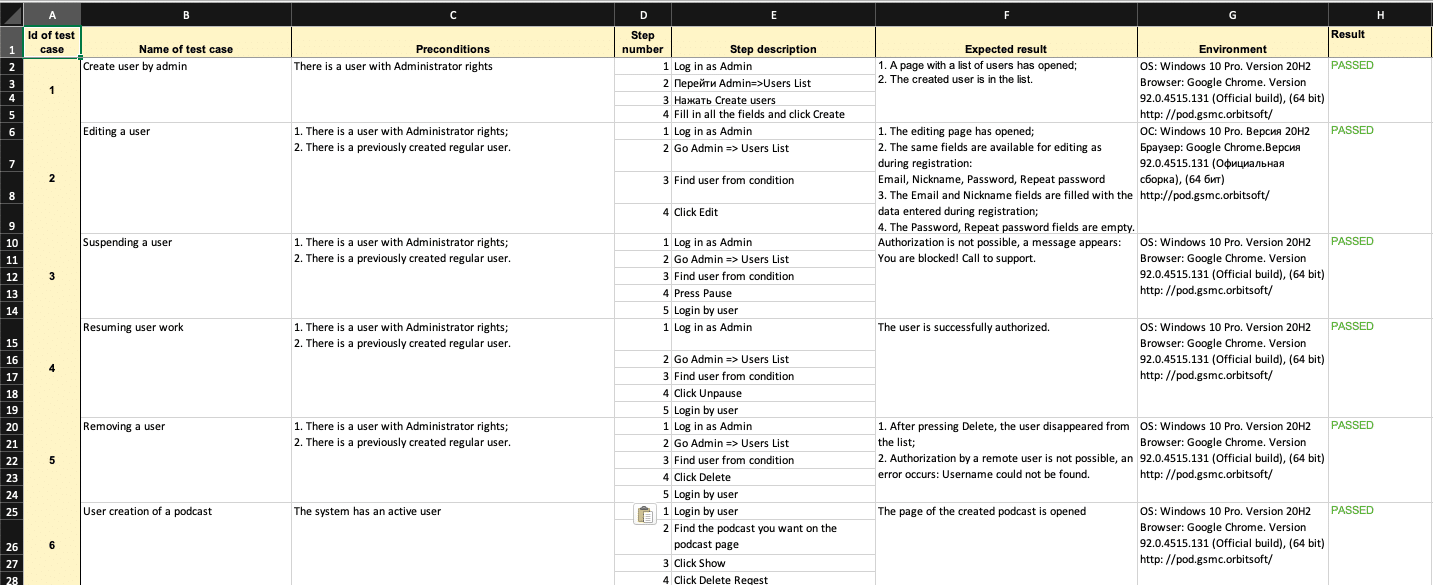

Testing starts after product development finishes. Before starting work, testers prepare documentation: a test plan, checklists, and test cases.

The test plan is a document describing the tested functions, strategies, and starting and ending dates of testing.

The checklist is a list of checks for testing a product.

The test case is a detailed description of a single check from the checklist, with its preconditions, input values, and expected result.
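The relationship between a checklist item and a test case can be sketched as a small data structure. This is only an illustration: the `TestCase` class, its field names, and the sample payment checks are hypothetical and do not come from a real OrbitSoft project.

```python
from dataclasses import dataclass, field


@dataclass
class TestCase:
    """One detailed check derived from a checklist item (hypothetical structure)."""
    title: str
    preconditions: list[str]
    input_values: dict[str, str]
    expected_result: str
    steps: list[str] = field(default_factory=list)


# Hypothetical checklist for a payment form: short names of checks only.
checklist = ["Pay with a valid card", "Pay with an expired card"]

# The second checklist item expanded into a full test case.
expired_card_case = TestCase(
    title=checklist[1],
    preconditions=["User is logged in", "Cart contains one item"],
    input_values={"card_number": "4111 1111 1111 1111", "expiry": "01/20"},
    expected_result="Payment is rejected with an 'expired card' message",
)

print(expired_card_case.title)
```

The checklist stays short and readable, while each test case carries everything a tester needs to repeat the check.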

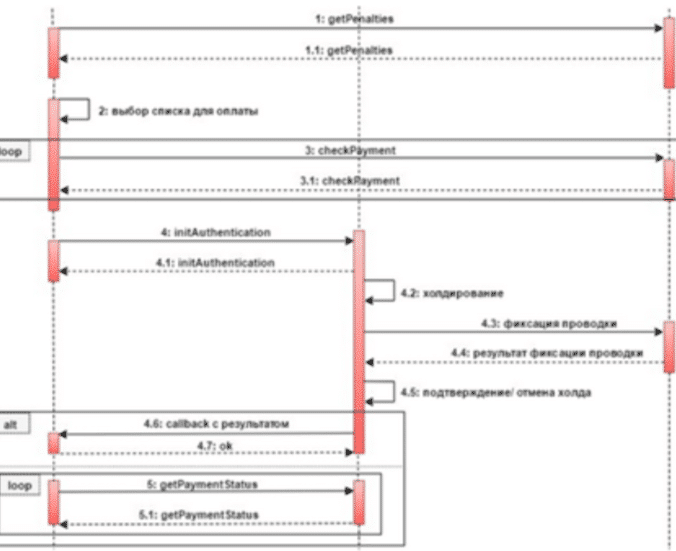

Testers write a test plan based on the terms of reference drawn up by the developers, for example, the terms of reference for the payment form on the site.

Stage 2: Smoke testing

To find obvious bugs, we run smoke testing using the black box model: testers check the system from the outside, without looking at the code. The main goal is to check the stability of the system as a whole.

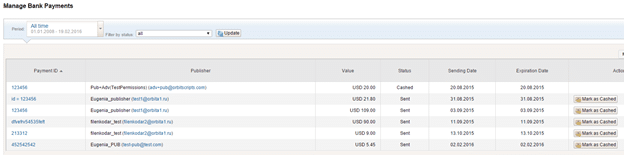

For example, a project can't make money if the system can't process payments. To make sure that everything works, testers open the payment page and check whether the system responds correctly.

If this stage is passed successfully, the tester moves on. If the tester finds an error, they create a task for the developers to fix it.
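A smoke check like the payment example could be automated roughly as follows. This is a minimal sketch, not our actual tooling: the page paths and the `fetch_status` callable are hypothetical, and a stub stands in for a real HTTP client so that the example runs on its own.

```python
from typing import Callable

# Hypothetical list of critical pages; the paths are assumptions for illustration.
CRITICAL_PAGES = ["/", "/login", "/payments"]


def run_smoke_test(fetch_status: Callable[[str], int]) -> list[str]:
    """Return the critical pages that did not respond with HTTP 200."""
    failed = []
    for path in CRITICAL_PAGES:
        try:
            status = fetch_status(path)
        except Exception:
            status = None  # a connection error counts as a failure
        if status != 200:
            failed.append(path)
    return failed


def fake_fetch_status(path: str) -> int:
    """Stand-in for a real HTTP client: pretends the payments page is down."""
    return 500 if path == "/payments" else 200


failed_pages = run_smoke_test(fake_fetch_status)
if failed_pages:
    print("Smoke test failed, create a task for developers:", failed_pages)
else:
    print("Smoke test passed, move on to functional testing")
```

Passing the HTTP call in as a parameter keeps the check itself independent of any particular client library.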

Stage 3: Functional testing

At this stage, we check how the functions correspond to the terms of reference: we look at functionality, accessibility, usability, and the user interface. The main goal is to test the logic of the operation of each function on different data sets and under different conditions. The result of functional testing will be a description of all inconsistencies and errors.
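Testing one function on different data sets can be sketched like this. The `validate_amount` function and its limits are an invented example of a rule that a terms of reference might contain; they are assumptions, not part of a real project.

```python
from decimal import Decimal, InvalidOperation

MIN_AMOUNT = Decimal("1.00")      # assumed limits, for illustration only
MAX_AMOUNT = Decimal("10000.00")


def validate_amount(raw: str) -> bool:
    """Hypothetical rule: a finite number with at most two decimal places,
    between MIN_AMOUNT and MAX_AMOUNT inclusive."""
    try:
        amount = Decimal(raw)
    except InvalidOperation:
        return False
    if not amount.is_finite():
        return False
    if amount.as_tuple().exponent < -2:
        return False  # more than two decimal places
    return MIN_AMOUNT <= amount <= MAX_AMOUNT


# Each data set pairs an input with the result expected by the terms of reference.
DATA_SETS = [
    ("1.00", True),       # lower boundary
    ("10000.00", True),   # upper boundary
    ("0.99", False),      # just below the minimum
    ("10000.01", False),  # just above the maximum
    ("12.345", False),    # too many decimal places
    ("abc", False),       # not a number
    ("", False),          # empty input
    ("-5", False),        # negative amount
]

# Collect every data set where the actual result differs from the expected one.
mismatches = [
    (raw, expected)
    for raw, expected in DATA_SETS
    if validate_amount(raw) != expected
]
print("All data sets passed" if not mismatches else f"Inconsistencies: {mismatches}")
```

The list of mismatches plays the role of the description of inconsistencies that functional testing produces.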

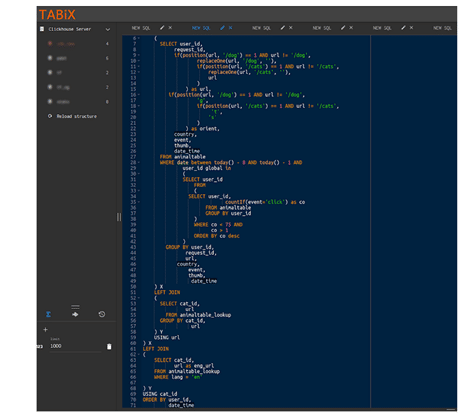

If the actual result differs from the expected one and the cause of the error isn't obvious, engineers run an SQL query against the database or check the log files of the system under test for additional information.
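Such a database check might look like the sketch below. To keep it self-contained, it uses an in-memory SQLite database instead of MySQL, PostgreSQL, or MariaDB, and the `payments` table with its columns and rows is invented for illustration.

```python
import sqlite3

# In-memory database standing in for the database of the system under test.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE payments (id INTEGER PRIMARY KEY, user_id INTEGER, "
    "amount TEXT, status TEXT, error_code TEXT)"
)
conn.executemany(
    "INSERT INTO payments (user_id, amount, status, error_code) VALUES (?, ?, ?, ?)",
    [
        (1, "25.00", "completed", None),
        (2, "40.00", "failed", "CARD_EXPIRED"),
        (2, "40.00", "completed", None),
    ],
)

# The tester saw a failed payment for user 2 in the interface and looks for the cause.
rows = conn.execute(
    "SELECT id, status, error_code FROM payments "
    "WHERE user_id = ? AND status = 'failed' ORDER BY id",
    (2,),
).fetchall()
print(rows)
conn.close()
```

The stored error code gives the tester a concrete detail to include in the task for developers.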

Stage 4: Regression testing

After testers find and describe inconsistencies, regression testing is performed.

This stage is divided into two parts. The first part is to check the corrections of errors found in the previous stage, and the second is to recheck all the functions. This must be done, as after fixing bugs there is no guarantee that the component that was checked earlier won’t break with the new corrections.

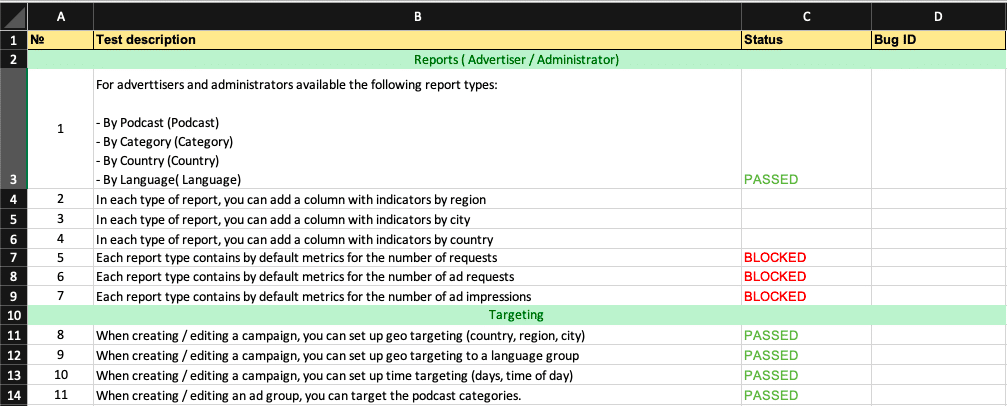

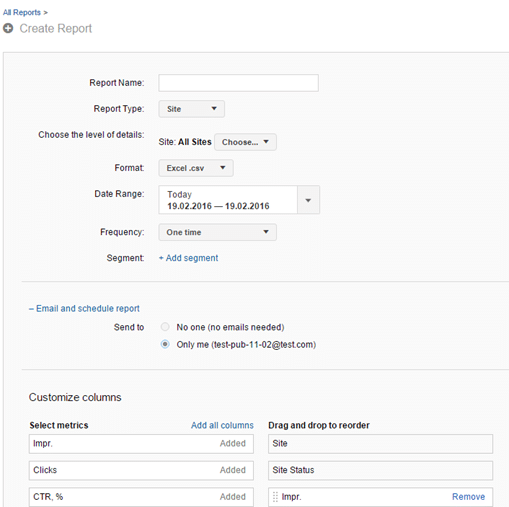

For example, say developers have fixed a bug in the display of the calendar, and now dates are in a single format. This fix may have affected other functions. Testers go to the admin panel and check them, e.g., whether reports are now created with dates in the required format, and whether all types of reports are available.
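A regression check for the date example could be sketched as follows. The required format, the report names, and the sample rows are assumptions made up for this illustration.

```python
from datetime import datetime

REQUIRED_FORMAT = "%Y-%m-%d"  # assumed target format, not from a real project


def dates_in_wrong_format(report_rows: list[dict]) -> list[str]:
    """Return every date string that is not written in REQUIRED_FORMAT."""
    wrong = []
    for row in report_rows:
        try:
            parsed = datetime.strptime(row["date"], REQUIRED_FORMAT)
        except ValueError:
            wrong.append(row["date"])
            continue
        # Round-trip to reject values such as "2024-3-5" that strptime tolerates.
        if parsed.strftime(REQUIRED_FORMAT) != row["date"]:
            wrong.append(row["date"])
    return wrong


# Hypothetical report data as it might be read from the admin panel.
reports = {
    "daily": [{"date": "2024-03-05"}, {"date": "2024-03-06"}],
    "monthly": [{"date": "05.03.2024"}],
}

# Recheck every report type, not only the one touched by the fix.
failures = {}
for name, rows in reports.items():
    bad = dates_in_wrong_format(rows)
    if bad:
        failures[name] = bad

print(failures or "All reports use the required date format")
```

Here the monthly report still uses the old format, which is exactly the kind of side effect regression testing is meant to catch.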

If a function fails, testers create a task for the developers to fix the bug, and recheck the function after the fix. Once everything works and no bugs remain, we move on to the final stage of testing.

Stage 5: Acceptance testing

This is the final testing before release. It is carried out to check how well the user scenarios correspond to the task described by the customer. Acceptance testing verifies core functionality.

For example: the site opens on different devices, the user can send contact details through the form, a video plays, and banners open.

When development is complete and all tests have passed, QA engineers conduct production testing on the customer's equipment at the customer's request.

Comparison of test types

| Test type | When it is performed | Goal | What is checked | What is not checked | Documentation | Methods |
| --- | --- | --- | --- | --- | --- | --- |
| Smoke testing | First tests | Check the stability of the system, so that we may proceed with further development and more detailed testing of functions | User scenarios | Non-functional requirements | Test plan, checklists, test cases | Black box model |
| Functional testing | When the individual functions of the system are implemented | Confirm that the system meets the requirements according to the terms of reference | All the logic of the implemented functions: accessibility, usability, user interface, database API | Performance testing (load testing, stress testing, stability testing), installation testing, security testing, configuration testing | Test plan, checklists, test cases | Black and gray box models, database queries, API requests |
| Regression testing | After the functional testing phase | Check that the system works correctly after modifications | Correctness of the fixes for defects found at the previous stage; all functions are rechecked to make sure that new fixes do not affect already-tested functions | Functions that worked correctly at the previous stage, if none of the developers' fixes could affect their state and logic | Test plan, checklists, test cases | Black and gray box models, database queries, API requests |
| Acceptance testing | Final testing before the project is delivered to the customer | Confirm that developers have implemented everything as requested by the customer | User interface scenarios | Each function separately | Test plan, checklists, test cases | Black box model |

Examples from OrbitSoft projects

| Customer | Project | Tests performed |
| --- | --- | --- |
| European video entertainment service | Add a new ad slider feature to the Orbit Ad Server online ad management system | On the advertiser’s side we tested: internal content formats. On the publisher’s side: advertising settings, types of display ads. On the side of the administrator, advertiser and publisher, we checked how the system of monetary settlements works, setting up reports. On the side of the system: creating and displaying ads, moderation, downloading videos of any formats, placing links for pulling videos from a third-party service, correct operation of the online advertising management system when changing settings and adding new functions, service availability: all operating systems, browsers, VPN, and under high load (traffic). |
| Advertising agency «Playmedia» | Change site functions: change the script for displaying ads on the site so that the ad is not visible immediately, but at the moment of scrolling through the page to the desired content | We tested the scripts for displaying all types of advertisements in accordance with the terms of reference in different parts of the web page. Through the browser console, we checked the operation of the script, which determines at which moment the advertisement is loaded. |

Technical block

SQL queries to the database

Searching and reading logs via the Kibana web interface

Logging: Logstash (collecting logs), Elasticsearch (indexing and searching logs)

Deployment of test benches:

Web servers and infrastructure services: Nginx, Apache Kafka, Apache ZooKeeper, Apache Cassandra

Database servers: MySQL, PostgreSQL, MariaDB

Build and run environment: Visual Studio

Programming languages: C#, Python, Ruby
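As an illustration of the log search listed above, the sketch below builds an Elasticsearch Query DSL body for recent error logs. The field names (`message`, `level`, `@timestamp`) are assumptions about how the log documents are shaped, and `level` is assumed to be indexed as a keyword field; a real setup may differ.

```python
import json


def build_error_log_query(keyword: str, minutes: int = 60) -> dict:
    """Build a Query DSL body for error logs from the last `minutes` minutes.

    The field names are assumptions; adjust them to the real index mapping.
    """
    return {
        "query": {
            "bool": {
                # Full-text match on the log message.
                "must": [{"match": {"message": keyword}}],
                # Exact filters: severity and time window.
                "filter": [
                    {"term": {"level": "ERROR"}},
                    {"range": {"@timestamp": {"gte": f"now-{minutes}m"}}},
                ],
            }
        },
        "sort": [{"@timestamp": {"order": "desc"}}],
        "size": 50,
    }


query = build_error_log_query("payment", minutes=30)
print(json.dumps(query, indent=2))
```

The printed JSON can be used as the body of a `_search` request, for example from the Kibana Dev Tools console, against whatever index holds the logs.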